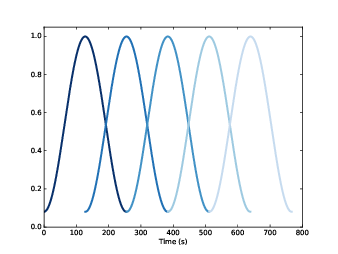

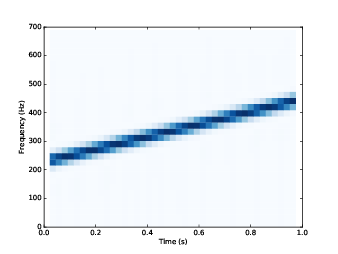

|

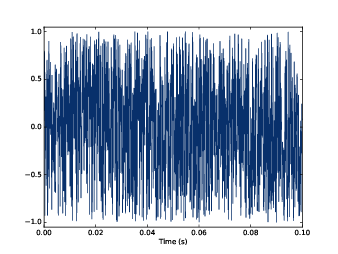

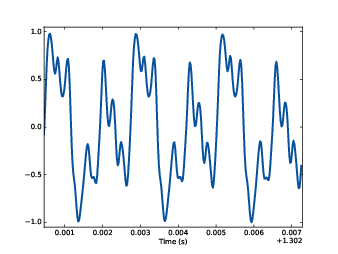

| Segment of a violin recording. |

I'm happy to announce that January is Digital Signal Processing month -- at least according to me. I am planning to celebrate by publishing a sneak preview of chapters from the book I am working on, Think DSP.

Think DSP is the latest in my series Think X (for all X). The series is based on the premise that many topics that are usually presented mathematically are easier to understand and work with computationally.

And Digital Signal Processing is a perfect example. In most books, Chapter 1 is about complex arithmetic, and you don't get to interesting applications for a few hundred pages, if ever.

With a computational approach, you have the option to go top-down: that is, you can start with applications, using packages like NumPy to do the computation, and for each technique, you can learn what it does before you learn how it works.
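To make the top-down idea concrete, here is a minimal sketch in plain NumPy (not code taken from the book). It synthesizes a signal from two cosines and uses `np.fft.rfft` to find the dominant frequency, without requiring you to know how the FFT works. The frame rate, frequencies, and amplitudes are arbitrary values I chose for illustration.

```python
import numpy as np

# Assumed values, chosen only for illustration.
framerate = 8000                          # samples per second
ts = np.arange(framerate) / framerate     # sample times covering one second

# Synthesize a signal: a 440 Hz cosine plus a quieter 880 Hz cosine.
ys = np.cos(2 * np.pi * 440 * ts) + 0.5 * np.cos(2 * np.pi * 880 * ts)

# Compute the spectrum of the real-valued signal.
hs = np.fft.rfft(ys)                                # complex amplitudes
fs = np.fft.rfftfreq(len(ys), d=1 / framerate)      # matching frequencies in Hz
amps = np.abs(hs)                                   # magnitude of each component

# The frequency with the largest magnitude is the dominant component.
peak_freq = fs[np.argmax(amps)]
print(peak_freq)
```

The point is the order of learning: you can see that the spectrum recovers the frequencies you put in before you study the transform itself.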

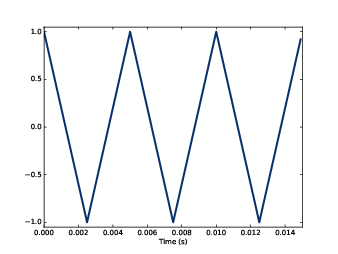

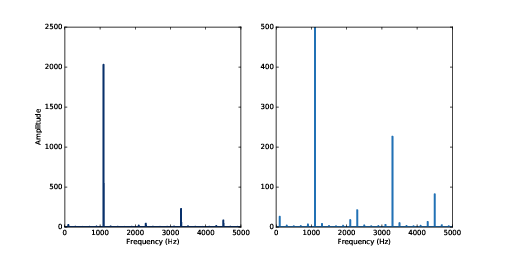

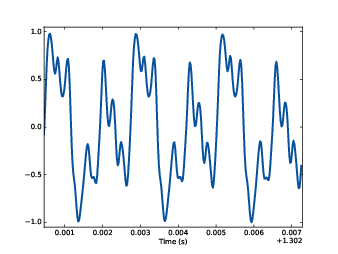

The power of this approach is clearest when you get to the exercises. After the first chapter of Think DSP, readers can generate and listen to complex signals, download (or make) a sound recording, compute and display its spectrum, apply filters, and play back the results. To see what I am talking about, check out this IPython notebook, which contains the examples from Chapter 1:

I think it's a lot more fun than the usual approach.
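As a rough sketch of what that workflow can look like, the following uses plain NumPy and the standard-library `wave` module (not the code from the notebook). It builds a two-component signal, applies a crude low-pass filter by zeroing spectrum components above a cutoff, and writes the result to a WAV file you can play. The cutoff, the frequencies, and the file name `filtered.wav` are assumptions for illustration.

```python
import os
import tempfile
import wave

import numpy as np

# Assumed values, chosen only for illustration.
framerate = 8000
ts = np.arange(framerate) / framerate     # one second of sample times

# A 440 Hz tone plus a 3000 Hz component we want to remove.
ys = np.cos(2 * np.pi * 440 * ts) + 0.5 * np.cos(2 * np.pi * 3000 * ts)

# Low-pass filter: zero every spectrum component above the cutoff.
cutoff = 1000                             # Hz
hs = np.fft.rfft(ys)
fs = np.fft.rfftfreq(len(ys), d=1 / framerate)
hs[fs > cutoff] = 0
filtered = np.fft.irfft(hs, n=len(ys))    # back to the time domain

# Scale to 16-bit little-endian integers, the sample format written below.
peak = np.max(np.abs(filtered))
samples = (filtered / peak * 32767).astype("<i2")

# Write a mono WAV file to the system temp directory (hypothetical file name).
path = os.path.join(tempfile.gettempdir(), "filtered.wav")
with wave.open(path, "wb") as f:
    f.setnchannels(1)
    f.setsampwidth(2)                     # 2 bytes per sample
    f.setframerate(framerate)
    f.writeframes(samples.tobytes())
```

Zeroing components outright is the bluntest possible filter, but it is enough to hear the difference between the original and the filtered signal.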

If you know a little bit about signal processing, you can probably understand the material in the notebook. But if you're a beginner, you should read Chapter 1 first. Here's an excerpt:

"A signal is a representation of a quantity that varies in time, or space, or both. That definition is pretty abstract, so let’s start with a concrete example: sound. Sound is variation in air pressure. A sound signal represents variations in air pressure over time.

"A microphone is a device that measures these variations and generates an electrical signal that represents sound. A speaker is a device that takes an electrical signal and produces sound. Microphones and speakers are called transducers because they transduce, or convert, signals from one form to another.

"This book is about signal processing, which includes processes for synthesizing, transforming, and analyzing signals. I will focus on sound signals, but the same methods apply to electronic signals and mechanical vibration.

"They also apply to signals that vary in space rather than time, like elevation along a hiking trail. And they apply to signals in more than one dimension, like an image, which you can think of as a signal that varies in two-dimensional space. Or a movie, which is a signal that varies in two-dimensional space and time.

"But we start with simple one-dimensional sound."

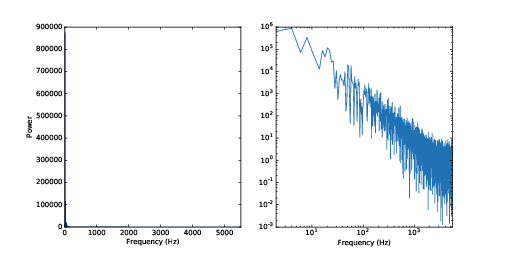

|

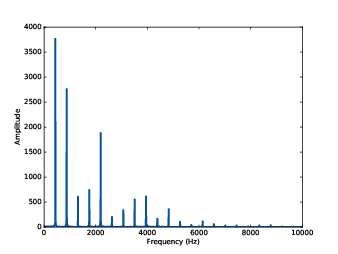

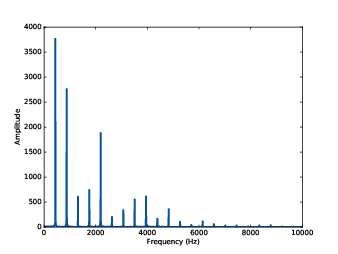

| Spectrum of a segment from a violin recording. |

You can read the rest of the chapter here:

And if you want to read the rest of the book, the current draft is here:

I welcome comments and suggestions from readers. You can add a comment to this blog or send me email. If I make a change based on your suggestion, I will add you to the book's contributor list (unless you ask me not to).